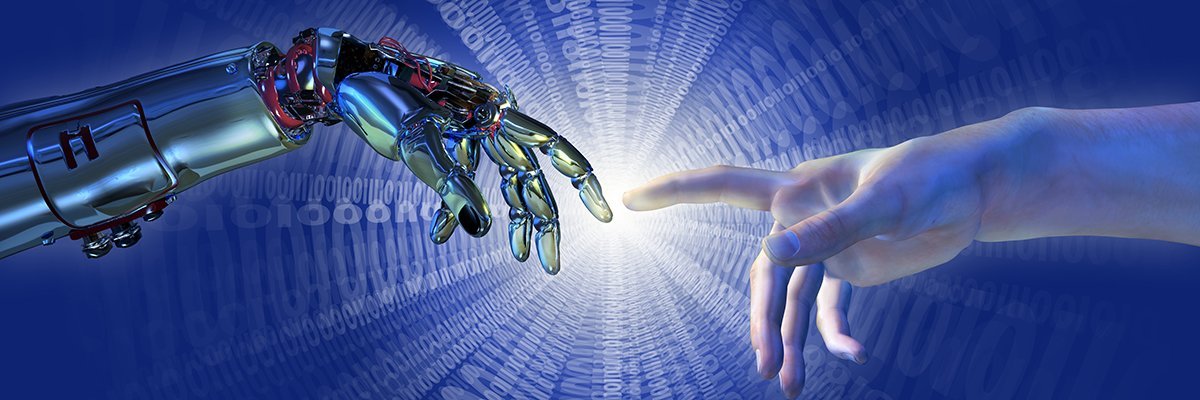

AlienCat – stock.adobe.com

Banks working with artificial intelligence technology face governance challenges to ensure AI-based decisions are fair and ethical

Three-quarters of banking executives think artificial intelligence (AI) will determine whether they succeed or fail, but they face major governance challenges, including ensuring decisions made by AI are fair and ethical.

A report from the Economist Intelligence Unit (EIU) said data bias that leads to discrimination against individuals or groups was one of the most prominent risks for banks using the technology.

AI is currently high on the banking agenda and the disruption caused by Covid-19 has “intensified” its adoption in the sector, according to the EIU. Banks are using AI to create more personalised banking services for customers, to automate back-office processes and to keep pace with developments at the digital challenger banks pioneering AI in the sector.

But banks will need to ensure AI-based decisions are ethical, fair and well documented, said the EIU’s Overseeing AI: Governing artificial intelligence in banking report. It said decisions made by computers must remain ethical and free of bias, and must be explainable.

To achieve these goals, AI should be ethical by design, data should be monitored for accuracy and integrity, development processes need to be well documented and banks need to ensure they have the right level of skills.

Pete Swabey, editorial director for Europe, the Middle East and Africa (EMEA) – Thought Leadership at the EIU, said: “AI is seen as a key competitive differentiator in the sector. Our study, drawing on the guidance given by regulators around the world, highlights the key governance challenges banks must address if they are to capitalise on the AI opportunity safely and ethically.”

A potential lack of human oversight is a major concern, according to the EIU. It said recruiting and training people with the right skills should be a priority: “Banks must ensure the right level of AI expertise across the business to build and maintain AI models, as well as oversee these models.”

But as pioneers of IT, and with their wealth of IT skills and research funds, banks are in a good position to set standards in AI development.

Prag Sharma, senior vice-president of Citi’s Innovation Labs, said: “Bias can creep into AI models in any industry, but banks are better positioned than most types of organisation to combat it. Maximising algorithms’ explainability helps to reduce bias.”

Adam Gibson, co-founder of AI ecosystem builder Skymind Global Ventures, said it was important to first define what ethics means to your product so you have guidelines to follow when you are designing AI applications.

“Also, you need diversity of thought and cross-functional teams to look at all aspects of the services you are building throughout its lifecycle,” he said.

“Collaboration with different people from different backgrounds is key to minimising bias and ethically questionable activity during the AI decision-making process. This doesn’t just include the people with the skills to build the tech, but also the users of the services. They will know first-hand any biases and will be able to flag them during the design process before the AI services are launched.”

Gibson added that AI is built through datasets, and often racist AI models come from racist datasets. “This is what we need to be aware of to help minimise and protect us from innovation that isn’t beneficial to society,” he said.
